The normal (Gaussian) distribution has an extremal character: under certain conditions, all other distributions converge to it. For this reason, some characteristics of normal random variables are also extremal. This property can be used to answer the question of whether a random variable is normal.

Instructions

1. To answer the question of whether a random variable is normal, you can use the concept of entropy $H(x)$ from information theory. Any discrete message formed from $n$ symbols $X = \{x_1, x_2, \ldots, x_n\}$ can be regarded as a discrete random variable defined by a series of probabilities. If the probability of using a symbol, for example $x_5$, equals $P_5$, then the probability of the event $X = x_5$ is the same. From information theory we also borrow the concept of the amount of information (more precisely, self-information) $I(x_i) = \log(1/P(x_i)) = -\log P(x_i)$. For brevity, write $P(x_i) = P_i$. Logarithms here are taken to base 2, and the base is not written in specific expressions. Incidentally, this is where the binary unit (binary digit), the bit, comes from.
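A minimal Python sketch of step 1, assuming the symbol probabilities are estimated from symbol frequencies in a sample message (the message and the function names are illustrative, not from the text):

```python
import math
from collections import Counter


def self_information(p: float) -> float:
    """Self-information I = -log2(p), in bits, of an outcome with probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("probability must be in (0, 1]")
    return -math.log2(p)


def symbol_probabilities(message: str) -> dict[str, float]:
    """Treat a message as a discrete random variable: estimate P_i from symbol counts."""
    counts = Counter(message)
    n = len(message)
    return {symbol: count / n for symbol, count in counts.items()}


# Illustrative message; each symbol gets a probability and a self-information in bits.
probs = symbol_probabilities("abracadabra")
for symbol, p in sorted(probs.items()):
    print(symbol, round(p, 3), round(self_information(p), 3))
```

An outcome with probability 1/8 carries exactly 3 bits, since $-\log_2(1/8) = 3$; rarer symbols carry more information than frequent ones.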

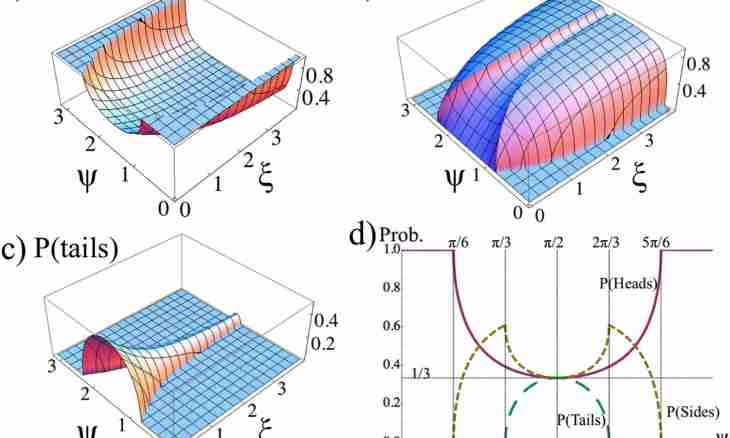

2. Entropy is the average amount of self-information in one value of a random variable: $H(x) = M[-\log P_i] = -\sum_{i=1}^{n} P_i \log P_i$, where $M[\cdot]$ denotes the expected value. Continuous distributions have entropy as well. To calculate the entropy of a continuous random variable, represent it in discrete form: split the range of its values into small intervals of width $\Delta x$ (the quantization step). Take the midpoint $x_i$ of each interval as a possible value, and instead of its probability use the area element $P_i \approx w(x_i)\,\Delta x$, where $w(x)$ is the probability density. The situation is illustrated in Fig. 1. It shows, up to small details, the Gaussian curve, which is the graph of the probability density of the normal distribution with mean $m_x$ and standard deviation $\sigma_x$, $w(x) = \frac{1}{\sigma_x\sqrt{2\pi}}\exp\left(-\frac{(x-m_x)^2}{2\sigma_x^2}\right)$; the figure also gives this formula. Examine the curve carefully and compare it with the data you have. Perhaps the question is already answered? If not, continue.
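The discretization of step 2 can be sketched in Python. The quantization range $[-6, 6]$, the step $\Delta x = 0.1$ and the function names are assumptions chosen for illustration; the density used is the standard normal one ($m_x = 0$, $\sigma_x = 1$).

```python
import math


def entropy_bits(probs):
    """Entropy H = -sum(P_i * log2 P_i) in bits; zero-probability terms contribute nothing."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def normal_pdf(x, mean=0.0, sigma=1.0):
    """Gaussian probability density w(x)."""
    z = (x - mean) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def quantize(pdf, lo, hi, dx):
    """Split [lo, hi) into cells of width dx; use each midpoint x_i and P_i ~ w(x_i) * dx."""
    n = int(round((hi - lo) / dx))
    midpoints = [lo + (i + 0.5) * dx for i in range(n)]
    return [pdf(x) * dx for x in midpoints]


probs = quantize(normal_pdf, -6.0, 6.0, 0.1)
print(sum(probs))           # close to 1: the cells cover almost all of the probability mass
print(entropy_bits(probs))  # entropy of the quantized (discrete) variable, in bits
```

The entropy of the quantized variable comes out near $\log_2\sqrt{2\pi e} - \log_2\Delta x$ rather than near the continuous value; step 3 explains where the extra $-\log_2\Delta x$ comes from.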

3. Apply the technique from the previous step. Now write out the series of probabilities of the resulting discrete random variable, find its entropy, and return to the continuous distribution by passing to the limit as $n \to \infty$ ($\Delta x \to 0$). All calculations are given in Fig. 2.
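Fig. 2 is not reproduced in the text; the standard form of this limit argument can be sketched as follows, in the notation of step 2 (the figure may present it differently):

```latex
\begin{aligned}
H_{\Delta}(x) &= -\sum_i P_i \log P_i
  = -\sum_i w(x_i)\,\Delta x\,\log\bigl(w(x_i)\,\Delta x\bigr) \\
&= -\sum_i w(x_i)\log w(x_i)\,\Delta x \;-\; \log\Delta x \sum_i w(x_i)\,\Delta x \\
&\xrightarrow[\Delta x \to 0]{} \; -\int_{-\infty}^{\infty} w(x)\log w(x)\,dx \;-\; \log\Delta x ,
\qquad \text{since } \sum_i w(x_i)\,\Delta x \to 1 .
\end{aligned}
```

The term $-\log\Delta x$ grows without bound as $\Delta x \to 0$ but does not depend on $w(x)$, so it is conventionally dropped. What remains is the differential entropy $H(x) = -\int w(x)\log w(x)\,dx = M[-\log w(x)]$.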

4. It can be proved that, among all distributions with the same variance, the normal (Gaussian) distribution has the maximum entropy. Using the final formula of the previous step, $H(x) = M[-\log w(x)]$, find this entropy by a simple calculation. No integration is required; the properties of the expected value are enough. The result is $H(x) = \log_2\left(\sigma_x\sqrt{2\pi e}\right) = \log_2\sigma_x + \log_2\sqrt{2\pi e} \approx \log_2\sigma_x + 2.047$. This is the maximum possible value. Now, using whatever data you have about your distribution (starting with a simple statistical sample), find its variance $D_x = \sigma_x^2$. Substitute the computed $\sigma_x$ into the expression for the maximum entropy. Then calculate the entropy $H(x)$ of the random variable you are investigating.
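The "simple calculation" of step 4 can be written out as below. This is a sketch that writes $m_x$ for the mean of the normal law and uses only the property $M[(x-m_x)^2] = \sigma_x^2$ of the expected value:

```latex
\begin{aligned}
-\log w(x) &= \log\bigl(\sigma_x\sqrt{2\pi}\bigr) + \frac{(x-m_x)^2}{2\sigma_x^2}\,\log e, \\
H(x) = M[-\log w(x)] &= \log\bigl(\sigma_x\sqrt{2\pi}\bigr) + \frac{M[(x-m_x)^2]}{2\sigma_x^2}\,\log e
  = \log\bigl(\sigma_x\sqrt{2\pi}\bigr) + \tfrac{1}{2}\log e \\
&= \log\bigl(\sigma_x\sqrt{2\pi e}\bigr),
\qquad \log_2\sqrt{2\pi e} = \tfrac{1}{2}\log_2(2\pi e) \approx 2.047 .
\end{aligned}
```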

5. Form the ratio $\varepsilon = H(x)/H_{\max}(x)$. Choose for yourself a probability $\varepsilon_0$, close to one, that you are willing to treat as sufficient when deciding whether your distribution is close to normal. Call it, say, the credibility probability. Values greater than 0.95 are recommended. If $\varepsilon > \varepsilon_0$, then (with probability at least $\varepsilon_0$) you are dealing with a Gaussian distribution.
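Steps 4 and 5 can be tried out numerically with a short Python sketch. It assumes a plug-in histogram estimate of $H(x)$ (one possible estimator among several, not one prescribed by the text), 30 bins, and the sample standard deviation as $\sigma_x$; the function names and the simulated data are hypothetical. Note that differential entropy shifts by $\log_2 c$ when the data are rescaled by a factor $c$, so $\varepsilon$ depends on the units of measurement and is meaningful only when $H_{\max}(x) > 0$.

```python
import math
import random
import statistics


def max_entropy_bits(sigma):
    """H_max = log2(sigma * sqrt(2*pi*e)): entropy of a normal law with std sigma."""
    return math.log2(sigma * math.sqrt(2.0 * math.pi * math.e))


def histogram_entropy_bits(sample, bins=30):
    """Assumed estimator: plug-in histogram estimate of differential entropy, in bits.

    P_i is estimated from bin counts, the density as w_i = P_i / dx,
    and H ~ -sum(P_i * log2(w_i)).
    """
    lo, hi = min(sample), max(sample)
    dx = (hi - lo) / bins
    counts = [0] * bins
    for x in sample:
        i = min(int((x - lo) / dx), bins - 1)  # the maximum value goes into the last bin
        counts[i] += 1
    n = len(sample)
    return -sum((c / n) * math.log2(c / (n * dx)) for c in counts if c > 0)


def normality_ratio(sample, bins=30):
    """epsilon = H(x) / H_max(x), with H_max taken from the sample standard deviation."""
    h_max = max_entropy_bits(statistics.stdev(sample))
    if h_max <= 0:
        raise ValueError("H_max <= 0: rescale the data so that sigma * sqrt(2*pi*e) > 1")
    return histogram_entropy_bits(sample, bins) / h_max


rng = random.Random(1)
eps0 = 0.95  # chosen "credibility probability"
gauss = [rng.gauss(0.0, 10.0) for _ in range(20000)]  # simulated normal data
expo = [rng.expovariate(0.1) for _ in range(20000)]   # simulated non-normal data
for name, data in (("gauss", gauss), ("exponential", expo)):
    eps = normality_ratio(data)
    print(name, round(eps, 3), "close to normal" if eps > eps0 else "not close to normal")
```

With these settings the simulated normal sample should give $\varepsilon$ close to 1 and the exponential sample a noticeably smaller value; the exact numbers depend on the seed, the sample size and the bin count.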